AI is harmless. Or is it?

I came across an altered, more suggestive photo of myself online, which made me rethink what it means to use AI appropriately.

Last week, I came across a photo of myself on LinkedIn with what looked like my bra peeking out from my shirt. Initially, I was horrified (by my own sloppiness), then shocked (believing someone had manipulated my photo to unbutton my blouse), then curious (when I learned that AI had been responsible for this unintentional blunder).

Before this experience, I didn’t have particularly strong opinions on AI model biases, misinformation, or ethical considerations. These doomsday extrapolations seemed pretty distant from my mundane use of ChatGPT and Claude.

But the person who used AI to modify my photo was just using a simple image extension tool to do her job more efficiently.

So this is precisely where we all need to pay attention.

It’s our job, as humans, to use AI appropriately.

Don't get me wrong. I'm excited about the proliferation of easy-to-use AI tools. But this experience has opened my eyes to why training data matters, why tool design matters, and most importantly, why how we use these tools matters.

I shared my experience online. More than 2M people saw it and thousands weighed in with their support or their scorn. This barrage of reactions gave me even more to think about…

If you want more details of my experience or wonder why I was so quick to forgive everyone involved in this snafu, read on.

I agreed to speak at Upscale’s AI + Creativity Conference. A week ago, I came across this promotional image on LinkedIn:

I don’t think most people would have even thought twice about this image. But I puzzled over it for a minute. Something wasn’t right. OMG, was my bra showing? Had it always been showing in my profile photo? I did a quick check. Nope, nothing was showing in the profile photo I had sent the conference organizers.

So did someone edit my photo to make it look sexier?!! I was appalled. I sent the conference organizers a note to say this was unacceptable and super creepy. They responded right away, apologized, immediately took down the content, and quickly got to the bottom of what happened.

It turned out that an employee had innocently used Gen-AI to modify the image proportions to fit her needs for the promotional material. As I learned more about what happened and walked through the Gen-AI tool myself, I started to realize just how crazy this all was…

What happened?

When I sent the conference organizers my profile photo and bio, they were forwarded to someone who posted them to the conference website.

Separately, another employee, Ana (name changed for this story), was asked to create promotional content to highlight some of the speakers on social media.

She had access to the speaker photos and bios from the conference website. These photos had all been cropped square.

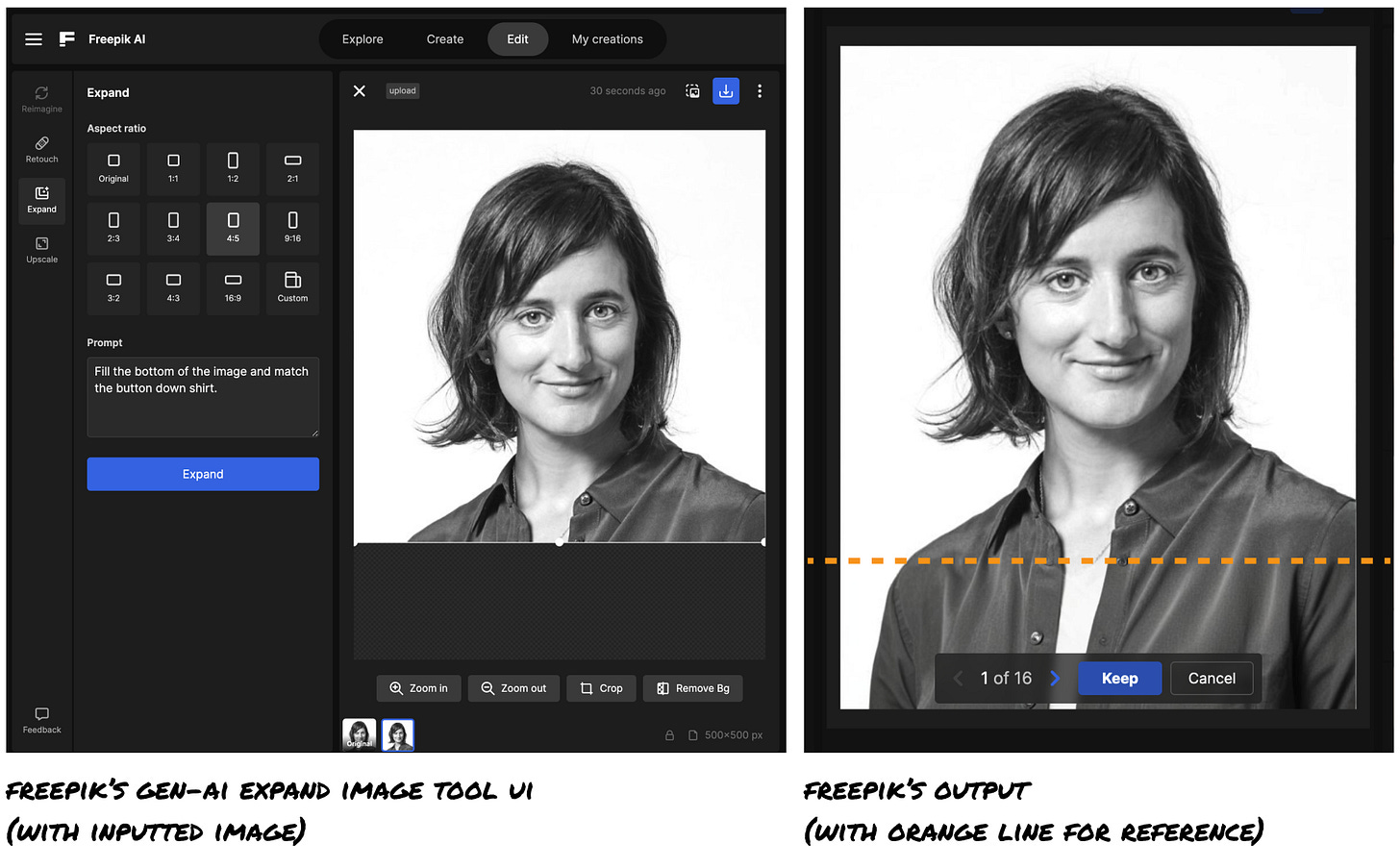

But Ana wanted a vertical image for the promotional materials, so she used Freepik’s Gen-AI image expansion tool to modify the dimensions. At a glance, it generated a very reasonable-looking image.

Note 1: When I replicated this flow, not all of the generated images were so reasonable (adding buttons to skin, not closing the shirt at all, adding a tie or ruffled blouse, etc.). So Ana chose well!

Note 2: The overlay for “<N of 16> [Keep] [Cancel]” at the bottom of each image should not overlay any part of the freshly AI-generated part of the image. Users should always be able to fully see the modified part of the image, so they can see any oddities.

What is amazing is that with the click of a button, AI changed the image proportions to what Ana needed (in a matter of seconds) and made it look like a natural extension of the image.

Before AI, Ana would have had to do one of the following:

- Work with the square photo.
- Create a taller image in Photoshop (laboring to make things look decent).
- Ask her colleagues/manager if they had a different version of the photo.
- Ask me for an alternate photograph.

While none of these alternatives are unreasonable, they are slow, inefficient, and rely on other people. I totally understand why Ana turned to AI — it was the path of least resistance.

What was the issue?

But here’s the issue: Regardless of whether you think the AI-modified photo is fine, it is not how I would have chosen to represent myself professionally.

Helena Price took the original photo. I sent that photo to the conference organizers. I didn’t care at all that the photo was turned to black and white or cropped square for the website. I did care, though, when I saw myself in a shirt I never wore, exposing more chest (or undergarments) than I ever would have chosen to do.

While Ana’s use of AI was innocent (and felt no more consequential than cropping an image), it did have unintended consequences.

What’s the lesson in this?

This experience highlights the challenges with using AI for seemingly innocuous, mundane tasks.

AI models will remain imperfect and the tools built on these models will become increasingly woven into our daily lives. This makes it incredibly important for us to think critically and use our judgment whenever we are using AI.

Anyone using AI in any capacity should:

- Consider if AI is a good tool for the task. Are there any unforeseen risks? Any equally good/better alternatives?
- Critically evaluate the output. Is this what I expected? Does it show unintended bias?
- If relevant, ask for consent. If the output stemmed from original material or has a strong likeness to something else that exists, check in with those impacted.

It’s our responsibility to adapt and learn to use AI appropriately.

Such a good post on the issues with using AI tools. Quick question: what would Ana have done before the AI tools?