Beyond the Chat Box: AI's Interface Evolution

From behind-the-scenes, to chat windows, to what's ahead for AI interfaces.

ChatGPT's launch in late 2022 marked a new chapter for AI. But it’s important to remember it wasn’t our first experience with AI.

Long before ChatGPT, AI powered numerous consumer-facing features: personalized recommendations on Netflix, social feeds on Instagram, facial recognition in Google Photos, demand pricing at Uber, fraud prevention for credit cards, and many more across major products.

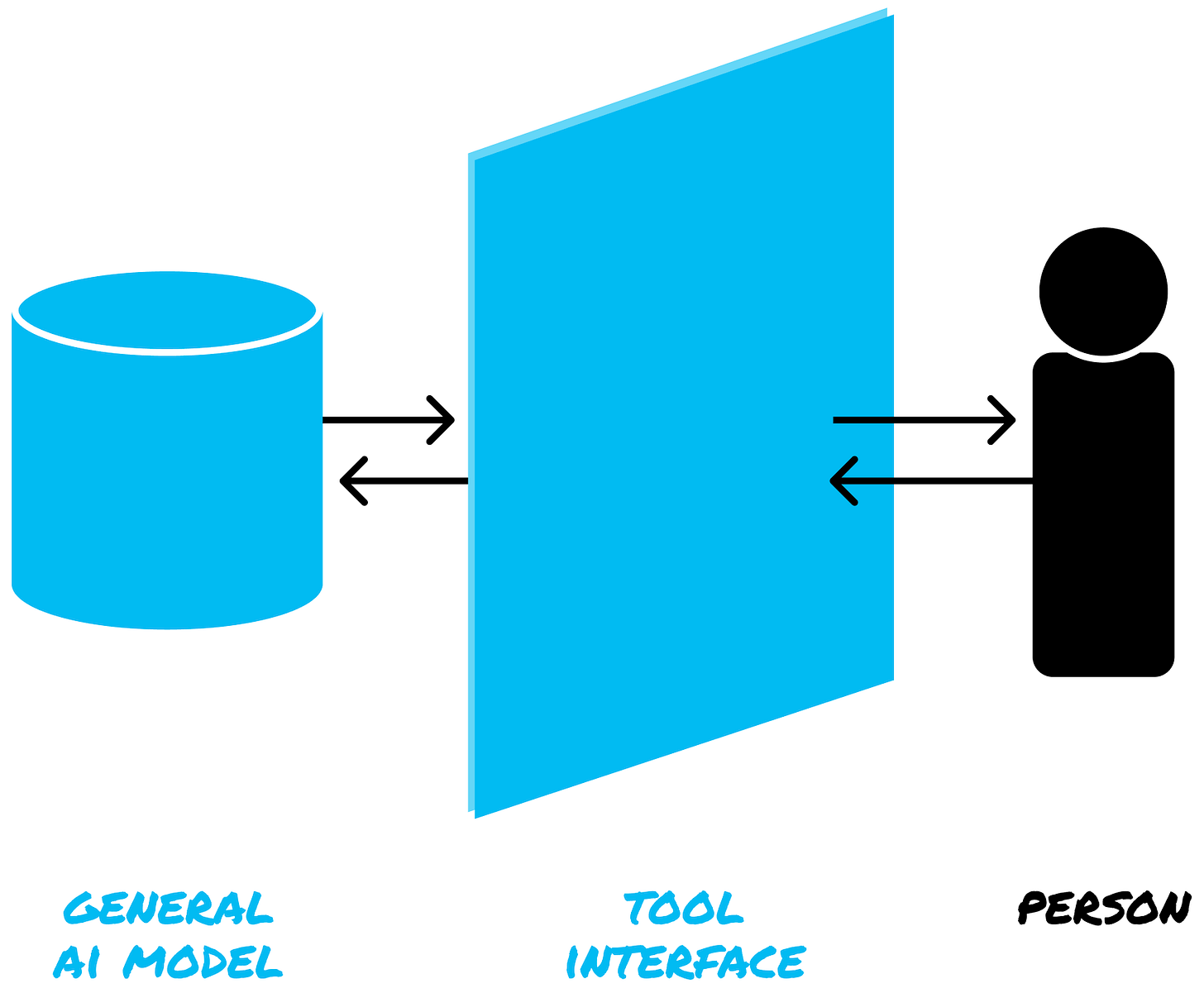

The difference is that we did not interface with this AI directly. We interfaced with products like Netflix or Uber, which happened to use specific AI models to power some of their features.


These AI models were trained for their specific applications and honed to perform one particular task very well. But they didn’t transfer to other uses. For example, Google's facial recognition might be nearly 100% accurate, but it couldn't help with demand pricing or fraud detection.

All-purpose AI + UI

Everything changed when Midjourney and ChatGPT arrived. AI itself became the product, rather than a behind-the-scenes feature. Suddenly, we had direct access to general-purpose AI through a simple chat interface.

Today, we have essentially one UI primitive: the chat box. We are in an early exploration phase, experimenting with UIs and learning:

What minimal inputs are needed for useful outputs

Which tasks these inputs can support

How interfaces should adapt for different interactions

What obstacles users face

Where the potential risks lie

This is the pivotal moment where we get to define the next generation of AI interfaces. These opportunities to reshape how we interact with technology are rare. One notable parallel is how Gmail revolutionized email interfaces in 2004.

Before Gmail, email interfaces were direct translations of physical mail into digital form, complete with an inbox, file folders, fixed storage capacity, and a trash can. When Paul Buchheit, Kevin Fox, and their team at Google approached the challenge, they reimagined what was possible in a digital-first world. The result was paradigm-shifting features such as search, conversation threading, and a then-unprecedented 1 GB of free storage.

New UI approaches

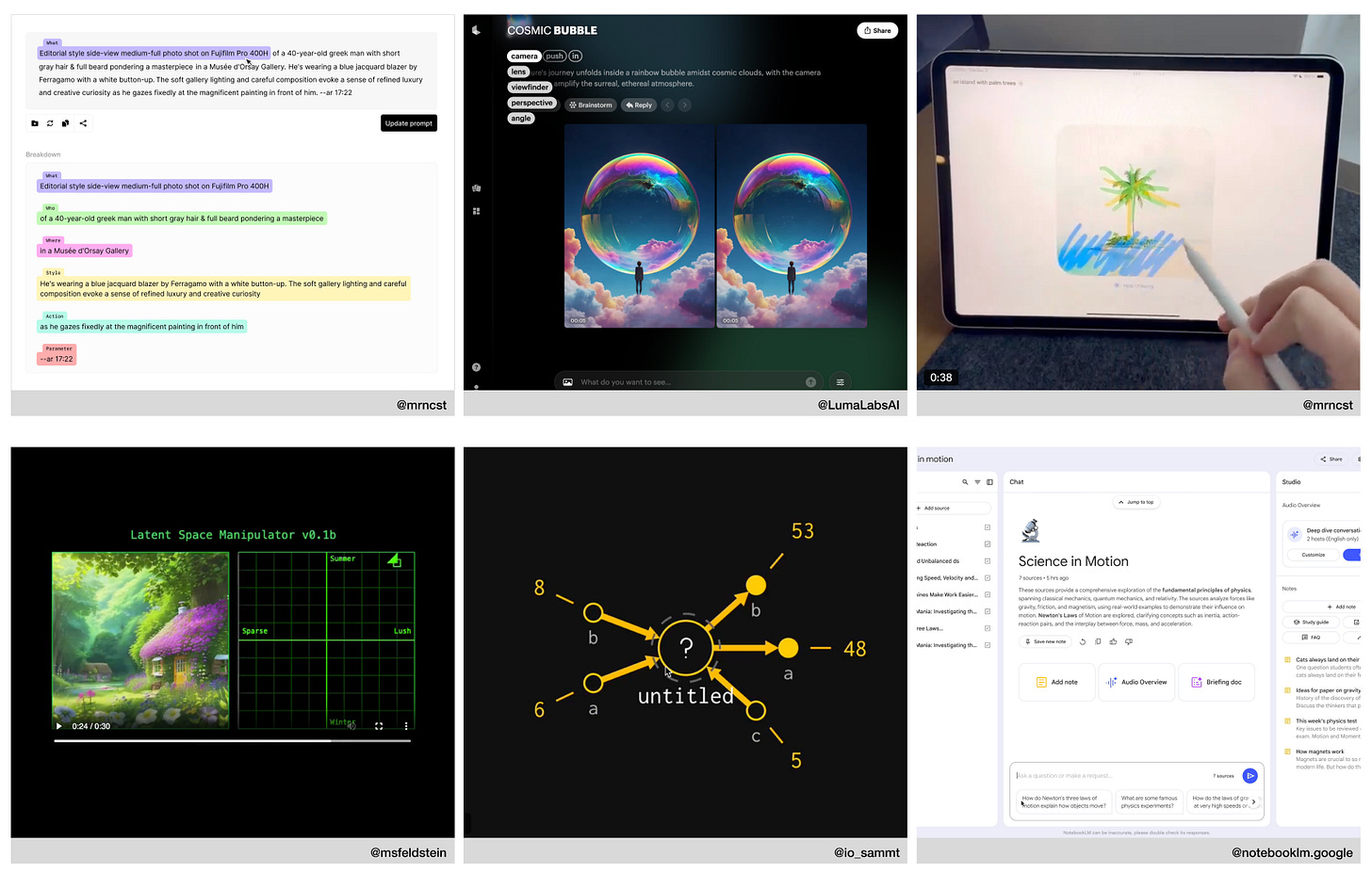

We are at a similar turning point with AI interfaces. Just as email interfaces initially mimicked physical mail, most current AI interfaces mirror traditional chat messaging. But AI tools are now evolving from general-purpose platforms into tools that support more specific tasks, and we are starting to see the first wave of innovation beyond the chat interface.

Des Traynor recently shared an excellent overview of emerging AI interfaces. Some new approaches include:

Visual tools that help users refine their prompts

Sketch-to-image generators that combine drawing with text input

Interfaces that handle multiple information streams at once

Tools designed for specific workflows and systems

But these early explorations are only the beginning. Just as Gmail moved beyond the "digital mailbox" metaphor, AI interfaces will evolve past the simple chat box to create entirely new ways for humans and AI to work together.

Where do direct brain-interface technologies fit in?